Overview

The main feature added in Artifactory 3.1 is support for a High Availability network configuration with a cluster of two or more active/active, read/write Artifactory servers on the same Local Area Network (LAN).

This configuration offers several benefits to your organization and is included with the Artifactory Pro Enterprise Value Pack.

Benefits

Maximize Uptime

The redundant network architecture of Artifactory HA means that there is no single point of failure: your system can continue to operate as long as at least one of the Artifactory nodes is operational. This maximizes your uptime and can bring it up to "five nines" (99.999%) availability.
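To put "five nines" in concrete terms, the Python sketch below converts an availability figure into expected downtime per year, and estimates the availability of a cluster that stays up as long as at least one node is up. It is only an illustration under stated assumptions: node failures are treated as independent, only the Artifactory nodes are modeled, and the 99% per-node availability is a hypothetical value.

```python
# Sketch: availability arithmetic for a redundant cluster.
# Assumptions (not from the Artifactory documentation): node failures are
# independent, and only the Artifactory nodes are modeled.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability: float) -> float:
    """Return the expected downtime per year for an availability between 0 and 1."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def cluster_availability(node_availability: float, nodes: int) -> float:
    """Availability of a cluster that is up while at least one node is up.

    The cluster is down only when every node is down at the same time,
    which, under the independence assumption, has probability (1 - a) ** n.
    """
    return 1.0 - (1.0 - node_availability) ** nodes


if __name__ == "__main__":
    # "Five nines" allows roughly 5.26 minutes of downtime per year.
    print(f"99.999%: {downtime_minutes_per_year(0.99999):.2f} min/year")
    # Hypothetical per-node availability of 99%.
    for n in (1, 2, 3):
        print(f"{n} node(s): {cluster_availability(0.99, n):.6%} available")
```

Under these assumptions, three nodes at 99% each would exceed five nines; real figures are lower when failures are correlated, for example when nodes share power or network equipment.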

Manage Heavy Loads

By using a redundant array of Artifactory server nodes in the network, your system can accommodate larger load bursts without compromising performance. With horizontal server scalability, you can easily increase your capacity to meet any load requirements as your organization grows.
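Horizontal scaling turns capacity planning into simple arithmetic. The sketch below estimates how many nodes a cluster needs for a given peak load. The per-node throughput figure, the even spreading of load, and the single spare node (N+1 redundancy, so the cluster can lose one node at peak) are all hypothetical planning assumptions, not Artifactory sizing guidance.

```python
# Sketch: rough node-count estimate for horizontal scaling.
# Assumptions (hypothetical): each node sustains a known, constant request
# rate, the load balancer spreads load evenly, and spare nodes are kept so
# that the cluster can lose a node at peak without running out of capacity.
import math


def nodes_needed(peak_requests_per_sec: float, per_node_capacity: float, spare_nodes: int = 1) -> int:
    """Return how many nodes are needed to serve the peak load, including spares."""
    if per_node_capacity <= 0:
        raise ValueError("per_node_capacity must be positive")
    base = math.ceil(peak_requests_per_sec / per_node_capacity)
    # An HA cluster has at least two nodes regardless of load.
    return max(base + spare_nodes, 2)


if __name__ == "__main__":
    # Hypothetical numbers: 900 req/s at peak, 400 req/s per node.
    print(nodes_needed(900, 400))  # ceil(2.25) = 3, plus 1 spare = 4
```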

Minimize Maintenance Downtime

By using an architecture with multiple Artifactory servers, Artifactory HA lets you perform most maintenance tasks with no system downtime.

Architecture

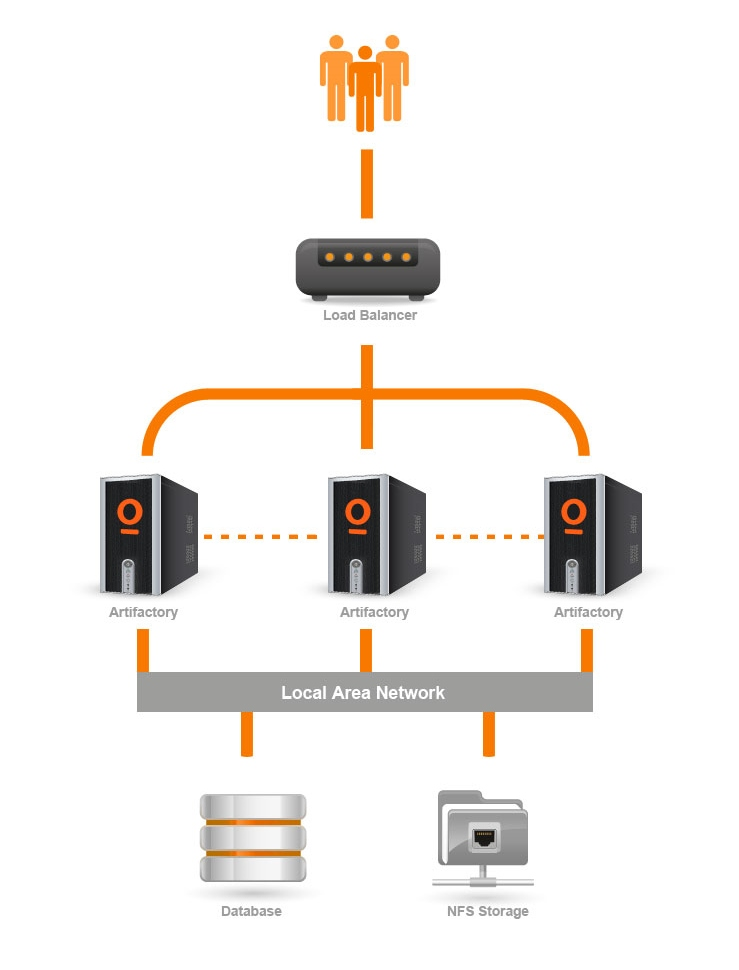

The Artifactory HA architecture consists of a load balancer connected to a cluster of two or more Artifactory servers that share a common database and network file system. The Artifactory cluster nodes must be connected through a fast internal LAN so that the system performs well and the nodes stay synchronized, notifying each other instantaneously of actions performed in the system. One of the Artifactory cluster nodes is configured as the "master" node, whose role is to execute cluster-wide tasks such as cleaning up unreferenced binaries.
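The reason for a single master is that cluster-wide tasks should run once, not once per node. The Python sketch below illustrates this with a simplified cleanup of unreferenced binaries: only the node flagged as master deletes anything. The data model (binaries identified by checksum, a mapping from artifact paths to checksums) and all names are hypothetical; this is not Artifactory's actual implementation.

```python
# Sketch: only the "master" node runs cluster-wide tasks, here a simplified
# cleanup of unreferenced binaries.
# Hypothetical model: stored binaries are identified by checksum, and the
# shared database maps each artifact path to the checksum of its binary.
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    is_master: bool


def find_unreferenced(stored_checksums: set, references: dict) -> set:
    """Return checksums of stored binaries that no artifact path references."""
    return stored_checksums - set(references.values())


def run_cleanup(node: Node, stored_checksums: set, references: dict) -> set:
    """Delete unreferenced binaries, but only when running on the master node."""
    if not node.is_master:
        # Non-master nodes skip cluster-wide tasks, avoiding duplicate work
        # and conflicting deletes on the shared file system.
        return set()
    garbage = find_unreferenced(stored_checksums, references)
    stored_checksums.difference_update(garbage)  # stand-in for deleting files
    return garbage


if __name__ == "__main__":
    cluster = [Node("art1", is_master=True), Node("art2", is_master=False)]
    store = {"sha1-a", "sha1-b", "sha1-c"}
    refs = {"libs/foo-1.0.jar": "sha1-a", "libs/foo-1.0.pom": "sha1-b"}
    for node in cluster:
        print(node.name, sorted(run_cleanup(node, store, refs)))
```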

The JFrog support team is available to help you configure the Artifactory cluster nodes. It is up to your organization's IT staff to configure your load balancer, database and network file system.

Network Topology

Load Balancer

The load balancer is the entry point to your Artifactory HA installation and optimally distributes requests to the Artifactory server nodes in your system.

Your load balancer must support session affinity (sticky sessions). It is the responsibility of your organization to manage and configure it correctly.

The code samples below show some basic examples of load balancer configurations:

More details are available on the nginx website.
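Whichever load balancer you use, session affinity follows a simple routing rule: requests that belong to the same session keep going to the same node while that node is healthy, and move to another node only when it fails. The Python sketch below models that rule with round-robin assignment for new sessions. The class and method names are hypothetical and are not part of any load balancer's API or configuration.

```python
# Sketch: the routing rule behind session affinity (sticky sessions).
# Hypothetical model only; real load balancers express this in their own
# configuration rather than in code.
import itertools


class StickyBalancer:
    def __init__(self, nodes: list):
        if not nodes:
            raise ValueError("at least one node is required")
        self.nodes = list(nodes)
        self.healthy = set(self.nodes)
        self._round_robin = itertools.cycle(self.nodes)
        self._sessions = {}  # session id -> node currently serving it

    def mark_down(self, node: str) -> None:
        """Take a failed node out of rotation."""
        self.healthy.discard(node)

    def route(self, session_id: str) -> str:
        """Return the node for this session, keeping it sticky while the node is healthy."""
        node = self._sessions.get(session_id)
        if node in self.healthy:
            return node
        # New session, or its node failed: assign the next healthy node.
        for _ in range(len(self.nodes)):
            candidate = next(self._round_robin)
            if candidate in self.healthy:
                self._sessions[session_id] = candidate
                return candidate
        raise RuntimeError("no healthy nodes available")
```

For example, with `StickyBalancer(["art1", "art2"])`, repeated calls to `route("alice")` return the same node until that node is marked down, after which the session moves to the remaining node.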

Artifactory Server Cluster

Each Artifactory server in the cluster receives requests routed to it by the load balancer. All servers share a common database and NFS mount, and communicate with each other to ensure that they are synchronized on all transactions.

Local Area Network

To ensure good performance and synchronization of the system, all the components of your Artifactory HA installation must be installed on the same high-speed LAN.

In theory, Artifactory HA could work over a Wide Area Network (WAN); however, in practice, network latency makes it impractical to achieve the performance required for high availability systems.

Shared Network File System

Artifactory HA requires a shared file system to store cluster-wide configuration and binary files. Artifactory HA requires that your shared file system supports concurrent requests and file locking.

Currently, the only shared file system that has been certified to work with Artifactory HA and is supported is NFS (versions 3 and 4).
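Before installing, you may want a quick check that the shared mount accepts file locks. The Python sketch below takes and releases an exclusive POSIX lock (`fcntl.lockf`) on a temporary probe file in a given directory. It assumes a Unix-like client (the `fcntl` module is Unix-only) and a writable directory on the mount; the probe file name is hypothetical. A successful lock only shows that the call works: it cannot prove that locks are enforced across nodes (for example, an NFS v3 mount using the `nolock` option keeps locks local to the client).

```python
# Sketch: check that a directory on the shared mount supports POSIX locking.
# Assumptions: Unix-like client (fcntl is Unix-only) and a writable directory.
import fcntl
import os
import uuid


def supports_posix_locking(directory: str) -> bool:
    """Try to take and release an exclusive, non-blocking lock on a probe file."""
    # Unique, hypothetical probe name so concurrent checks from several nodes don't collide.
    probe = os.path.join(directory, f".lock-probe-{uuid.uuid4().hex}")
    try:
        with open(probe, "w") as f:
            try:
                fcntl.lockf(f, fcntl.LOCK_EX | fcntl.LOCK_NB)
                fcntl.lockf(f, fcntl.LOCK_UN)
                return True
            except OSError:
                return False
    finally:
        # Remove the probe file whether or not locking succeeded.
        try:
            os.remove(probe)
        except FileNotFoundError:
            pass
```

On each node you would call it with a directory on the mount, for example `supports_posix_locking("/mnt/artifactory-nfs")` (a hypothetical path).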

Mounting the NFS from Artifactory HA nodes

When mounting the NFS on the client side, make sure to add the following option for the mount command:

lookupcache=none

This ensures that nodes in your HA cluster will immediately see any changes to the NFS made by other nodes.
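To confirm that each node actually mounted the share with this option, you can inspect the mount table. The Python sketch below parses text in the Linux `/proc/mounts` format (device, mount point, file system type, options, dump, pass) and checks for `lookupcache=none` on a given NFS mount point. It assumes Linux clients that list the option in `/proc/mounts`; the server name, export and mount point are hypothetical.

```python
# Sketch: verify that an NFS mount uses lookupcache=none by parsing a
# /proc/mounts-style table (Linux clients assumed).
# The share might have been mounted with, for example (hypothetical names):
#   mount -t nfs -o lookupcache=none nfs-server:/export/artifactory /mnt/artifactory-nfs
from typing import Dict, Optional


def nfs_mount_options(mounts_text: str, mount_point: str) -> Optional[Dict[str, str]]:
    """Return the options of the NFS mount at mount_point, or None if there is none."""
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue
        _device, point, fs_type, options = fields[:4]
        if point == mount_point and fs_type in ("nfs", "nfs4"):
            parsed = {}
            for opt in options.split(","):
                key, _, value = opt.partition("=")  # flags like "rw" get an empty value
                parsed[key] = value
            return parsed
    return None


def has_lookupcache_none(mounts_text: str, mount_point: str) -> bool:
    opts = nfs_mount_options(mounts_text, mount_point)
    return opts is not None and opts.get("lookupcache") == "none"


if __name__ == "__main__":
    sample = "nfs-server:/export/artifactory /mnt/artifactory-nfs nfs4 rw,vers=4.1,lookupcache=none 0 0\n"
    print(has_lookupcache_none(sample, "/mnt/artifactory-nfs"))
```

On a real node, you would pass the contents of `/proc/mounts` instead of the sample string.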

Database

Artifactory HA requires an external database, and currently supports MySQL, Oracle, MS SQL and PostgreSQL. For details on how to configure any of these databases, please refer to Changing the Default Storage.

Since Artifactory HA contains multiple Artifactory cluster nodes, your database must be powerful enough to service all the nodes in the system. Moreover, your database must be able to support the maximum number of connections possible from all the Artifactory cluster nodes in your system.
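The maximum number of connections grows with the number of nodes. The sketch below shows the worst-case arithmetic, in which every node opens its full connection pool at the same time. The pool size per node and the number of connections reserved for administrators and monitoring are hypothetical values; use the figures from your own configuration.

```python
# Sketch: estimate the database connection limit an HA cluster needs.
# Assumptions (hypothetical): every node's connection pool can grow to the
# same maximum, and a few connections are reserved for DBAs, monitoring and
# other clients.


def required_db_connections(nodes: int, max_pool_per_node: int, reserved: int = 10) -> int:
    """Worst case: every node opens its full pool simultaneously."""
    if nodes < 1 or max_pool_per_node < 1 or reserved < 0:
        raise ValueError("invalid sizing parameters")
    return nodes * max_pool_per_node + reserved


if __name__ == "__main__":
    # e.g. 3 nodes, each with a pool of up to 100 connections, plus 10 reserved
    print(required_db_connections(3, 100))  # 310
```

Compare the result with your database's own connection limit, such as the `max_connections` setting in MySQL and PostgreSQL.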

If you are replicating your database, you must ensure that at any given point in time all nodes see a consistent view of the database, regardless of which specific database instance they access. Eventual consistency and write-behind database synchronization are not supported.